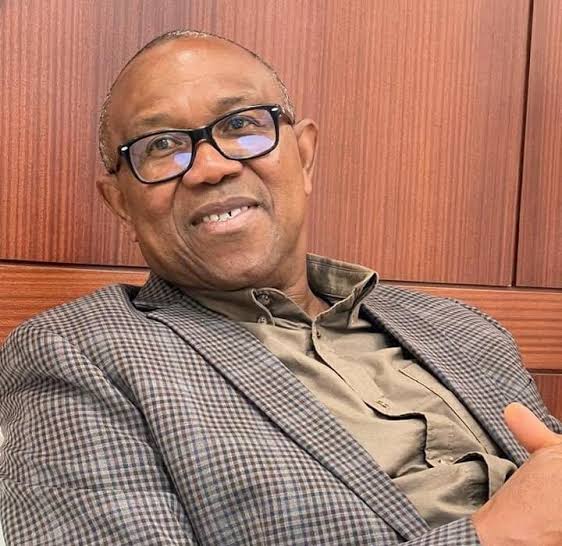

Sam Altman, chief executive officer of OpenAI, at an event in Seoul, South Korea, on Friday, June 9, 2023.

Bloomberg | Getty Images

OpenAI’s ChatGPT can now “see, hear and speak” — or, at least, understand spoken words, respond with a synthetic voice and process images, the company announced Monday.

The update to the chatbot — OpenAI’s biggest since the introduction of GPT-4 — allows users to opt into voice conversations on ChatGPT’s mobile app and choose from five different synthetic voices for the bot to respond with. Users will also be able to share images with ChatGPT and highlight areas of focus or analysis (think: “What kinds of clouds are these?”).

The changes will be rolling out to paying users in the next two weeks, OpenAI said. While voice functionality will be limited to the iOS and Android apps, the image processing capabilities will be available on all platforms.

The big feature push comes amid the ever-rising stakes of the AI arms race among chatbot leaders such as OpenAI, Microsoft, Google and Anthropic. In an effort to encourage consumers to adopt generative AI in their daily lives, tech giants are racing to launch not only new chatbot apps, but also new features, especially this summer: Google has announced a slew of updates to its Bard chatbot, and Microsoft added visual search to Bing.

Earlier this year, Microsoft’s expanded investment in OpenAI, an additional $10 billion, was the biggest AI investment of the year, according to PitchBook. In April, the startup reportedly closed a $300 million share sale at a valuation between $27 billion and $29 billion, with investments from firms such as Sequoia Capital and Andreessen Horowitz.

Experts have raised concerns about AI-generated synthetic voices, which in this case could give users a more natural experience but also enable more convincing deepfakes. Cyber threat actors and researchers have already begun to explore how deepfakes can be used to penetrate cybersecurity systems.

OpenAI acknowledged those concerns in its Monday announcement, saying that synthetic voices were “created with voice actors we have directly worked with,” rather than collected from strangers.

The release also provided little information about how OpenAI would use consumer voice inputs, or how the company would secure that data if it were used. OpenAI did not immediately respond to a request for comment, and the company’s terms of service say that consumers own their inputs “to the extent permitted by applicable law.”