In a recent interview with CNBC’s Jim Cramer, Nvidia CEO Jensen Huang shared details about the company’s upcoming Blackwell chip, which cost $10 billion in research and development to create.

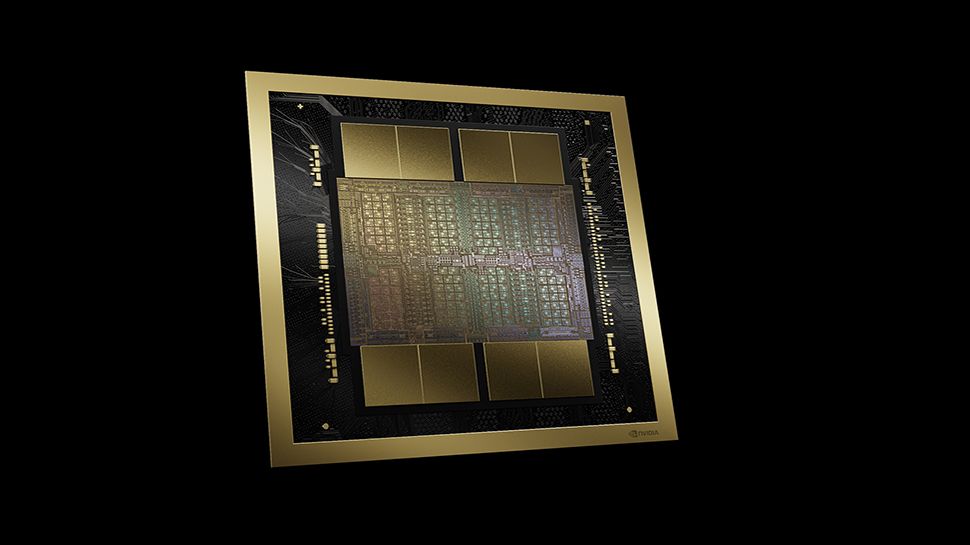

The new GPU is built on a custom TSMC 4NP process and packs a total of 208 billion transistors (104 billion per die), with 192GB of HBM3e memory and 8TB/s of memory bandwidth. It involved the creation of new technology because what the company was trying to achieve “went beyond the limits of physics,” Huang said.

During the chat, Huang also revealed that the fist-sized Blackwell chip will sell for “between $30,000 and $40,000.” That’s similar in price to the H100, which analysts say cost between $25,000 and $40,000 per chip when demand was at its peak.

A big markup

According to estimates by investment services firm Raymond James (via @firstadopter), each B200 will cost Nvidia in excess of $6,000 to make, compared with an estimated production cost of $3,320 for the H100.
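To put those figures side by side, the short Python sketch below works out the implied markup from the numbers quoted in this article. It is a back-of-the-envelope illustration only: the function and variable names are our own, the inputs are third-party estimates rather than Nvidia data, and the B200 cost is treated as exactly $6,000 even though the estimate is "in excess of" that amount.

```python
# Figures quoted in the article. All are analyst estimates, not Nvidia data.
ESTIMATES = {
    # The B200 cost is "in excess of $6,000", so 6_000 is a lower bound (assumption).
    "B200": {"cost": 6_000, "price_low": 30_000, "price_high": 40_000},
    "H100": {"cost": 3_320, "price_low": 25_000, "price_high": 40_000},
}


def markup_multiple(price: float, cost: float) -> float:
    """Selling price expressed as a multiple of production cost."""
    return price / cost


def gross_margin(price: float, cost: float) -> float:
    """Fraction of the selling price left after production cost (0 to 1)."""
    return (price - cost) / price


def summarize(chip: str) -> dict:
    """Return the low and high markup multiples and margins for one chip."""
    e = ESTIMATES[chip]
    return {
        "multiple_low": markup_multiple(e["price_low"], e["cost"]),
        "multiple_high": markup_multiple(e["price_high"], e["cost"]),
        "margin_low": gross_margin(e["price_low"], e["cost"]),
        "margin_high": gross_margin(e["price_high"], e["cost"]),
    }


if __name__ == "__main__":
    for chip in ESTIMATES:
        s = summarize(chip)
        print(
            f"{chip}: {s['multiple_low']:.1f}x to {s['multiple_high']:.1f}x cost, "
            f"{s['margin_low']:.0%} to {s['margin_high']:.0%} gross margin"
        )
```

On these estimates, the B200 would sell for roughly 5 to 6.7 times its production cost, against roughly 7.5 to 12 times for the H100 at peak prices. Because the B200 cost is only a lower bound, its real multiple could be smaller, and the calculation ignores the $10 billion spent on research and development.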

The final selling price of the GPU will vary depending on whether it’s bought directly from Nvidia or through a third-party seller, but customers aren’t likely to be purchasing just the chips.

Nvidia has already unveiled three variations of its Blackwell AI accelerator with different memory configurations: the B100, the B200, and the GB200, which brings together two Nvidia B200 Tensor Core GPUs and a Grace CPU. Nvidia’s strategy, however, is geared towards selling million-dollar AI supercomputers like the multi-node, liquid-cooled NVIDIA GB200 NVL72 rack-scale system, DGX B200 servers with eight Blackwell GPUs, or DGX B200 SuperPODs.